Your annual security training teaches employees to spot grammatical errors, suspicious links, and generic greetings, but AI-powered phishing attacks no longer contain these red flags. Generative AI now produces polished emails that replicate your vendors’ communication style and terminology, and 60% of recipients cannot tell the fake messages from legitimate ones. While you’re training your team to catch yesterday’s threats, attackers are deploying AI tools that analyze communication patterns and generate personalized attacks your current defenses weren’t designed to stop.

The $60 Million Email That Looked Completely Legitimate

In February 2024, a finance manager at a mid-sized healthcare company received what appeared to be a routine email from her company’s pharmaceutical vendor. The sender’s address was correct. The tone matched previous conversations. The vendor’s banking details needed updating, which is standard procedure. She verified the request by replying to the email thread, the one channel the attacker controlled. The vendor “confirmed” via return message. She processed the $60 million wire transfer.

Every message was AI-generated in real time. The entire email thread was fabricated using AI phishing tools that had analyzed previous vendor communications. No traditional email filter caught it because the content was original, fit the context, and contained nothing a signature-based filter would match. By the time the company’s incident response team investigated, the funds had disappeared through cryptocurrency tumblers, and the company never recovered them. Phishing now looks exactly like legitimate business communication.

How AI Transformed Social Engineering Overnight

Until November 2022, social engineering attacks required weeks of research, specialized technical skills, and native-level language proficiency. Then ChatGPT launched, and everything changed.

Today’s attackers use generative AI to craft polished phishing emails in seconds, with no typos, no awkward phrasing, and none of the red flags your team was trained to spot. AI-powered attacks can now analyze your company’s communication style, replicate vendor terminology, and generate convincing replies that bypass traditional email filters.

The numbers prove it: 60% of recipients can’t distinguish AI-generated phishing from legitimate messages. Your cybersecurity awareness training taught employees to spot “Nigerian prince” scams, but these attacks mirror your CFO’s writing style perfectly.

Most concerning? Attackers have access to the same AI tools that are transforming every industry. While your security protocols stayed static, their capabilities kept advancing.

The Growing Gap Between Training and Real-World Attacks

Your employees completed their annual cybersecurity training in January. By February, attackers had already deployed three new AI-powered attacks your training never covered. That’s the fundamental problem: static training versus constantly evolving threats.

Traditional security awareness programs teach employees to spot grammatical errors and suspicious links. But AI-driven security threats now generate perfect grammar, replicate behavioral patterns, and personalize messages using scraped LinkedIn data. Your team learned to recognize 2023’s threats while facing 2024’s AI-powered attacks.

Most MSPs still deliver annual training modules and consider the task complete. We’ve transitioned to continuous simulations that mirror the actual attack techniques we’re seeing this month, because effective security means keeping pace with threats, not checking compliance boxes.

What AI-Native Security Actually Looks Like

When attackers adopted AI tools in 2023, we recognized that layering new solutions onto decade-old security stacks wouldn’t work. True AI-powered security required rebuilding from the ground up.

We deployed email filters that analyze behavior patterns rather than hunting for known signatures, which is critical when AI-generated content produces attacks no filter has seen before. We replaced SMS-based authentication with phishing-resistant MFA using FIDO2 security keys, whose credentials are bound to the legitimate site and cannot be entered on or relayed through a lookalike login page.
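
To make "behavior rather than signatures" concrete, here is a minimal Python sketch. It is an illustration only, not a description of any specific product: the `SenderProfile` record, the keyword list, and the `behavior_flags` function are hypothetical names, and a real filter would learn these patterns from mail history rather than use a fixed list.

```python
from dataclasses import dataclass, field


@dataclass
class SenderProfile:
    """Hypothetical record of what is normal for one known sender."""
    reply_to_domains: set[str] = field(default_factory=set)
    has_sent_payment_changes: bool = False


# Assumed phrases for the example; not an exhaustive or recommended list.
PAYMENT_CHANGE_TERMS = ("bank details", "banking details", "new account", "routing number")


def behavior_flags(profile: SenderProfile, reply_to_domain: str, body: str) -> list[str]:
    """Return reasons a message departs from this sender's past behavior."""
    flags = []
    # Replies would go somewhere this sender has never used before.
    if reply_to_domain.lower() not in profile.reply_to_domains:
        flags.append("unfamiliar reply-to domain")
    # The sender has never asked to change payment details before.
    text = body.lower()
    if any(term in text for term in PAYMENT_CHANGE_TERMS) and not profile.has_sent_payment_changes:
        flags.append("first-ever payment change request")
    return flags


vendor = SenderProfile(reply_to_domains={"vendor.example"})
print(behavior_flags(vendor, "vendor-billing.example", "Please update our banking details."))
```

Nothing in this check depends on the wording being sloppy or previously seen. A flawlessly written message still gets flagged if it asks for something this sender has never asked for.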

Most importantly, we implemented AI anomaly detection that monitors for unusual patterns in financial transactions and data access—the warning signs traditional tools miss.
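
As a rough illustration of the idea, the sketch below flags a payment whose amount is far outside a vendor's history. It uses a plain statistical test (the modified z-score, built on the median and median absolute deviation) as a stand-in for a trained model. The function name `is_anomalous`, the five-payment minimum, and the sample amounts are assumptions made for the example.

```python
from statistics import median


def is_anomalous(history: list[float], amount: float, threshold: float = 3.5) -> bool:
    """Flag `amount` if its modified z-score against past payments exceeds `threshold`."""
    if len(history) < 5:
        return True  # too little history to judge: send to manual review
    med = median(history)
    # Median absolute deviation: a spread measure that outliers barely move.
    mad = median([abs(x - med) for x in history])
    if mad == 0:
        return amount != med  # every past payment was identical
    score = 0.6745 * abs(amount - med) / mad
    return score > threshold


past_payments = [12000, 12500, 11800, 13000, 12200, 12700]  # made-up amounts
print(is_anomalous(past_payments, 12900))   # within the usual range
print(is_anomalous(past_payments, 250000))  # far outside it
```

A production system would look at more than the amount, such as the destination account, time of day, and who approved the payment, but the principle is the same: compare each event with what is normal for that relationship.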

We paired this technology with continuous security training using monthly simulations based on actual AI-powered attacks we’re tracking. Annual compliance training became obsolete the moment attackers gained real-time capabilities.

Questions Every Business Leader Should Ask Their MSP Today

If your MSP can’t answer these questions clearly, you’re likely protected against yesterday’s threats while today’s AI-powered attacks bypass your defenses.

- “Do you deploy continuous learning mechanisms that adapt to evolving AI-generated attacks, or rely on annual training?” Traditional providers update defenses quarterly. Modern MSPs use AI-powered campaign automation to simulate current threat patterns monthly.

- “Are you monitoring for agentic AI systems that conduct reconnaissance and craft personalized attacks in real time?” Most MSPs track generic phishing. You need behavioral monitoring that flags anomalous communication patterns.

- “What’s your documented procedure when someone requests urgent wire transfers via email?” Technology alone won’t stop social engineering.

If these questions receive vague answers or promises to “look into it,” you’re vulnerable right now.
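
A documented wire-transfer procedure can be specific enough to write down as rules. The sketch below is one hypothetical policy, not a standard: the `WireRequest` fields, the `may_release` function, and the $10,000 dual-approval limit are all assumptions chosen for illustration. The key rule is that a change to banking details is confirmed by calling a number already on file, never by replying to the email that requested it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class WireRequest:
    amount: float
    changes_bank_details: bool
    verified_by_callback: bool  # confirmed by phone, using a number already on file
    second_approver: Optional[str] = None


def may_release(req: WireRequest, dual_approval_over: float = 10_000) -> tuple[bool, list[str]]:
    """Return (allowed, reasons blocking release) under the example policy."""
    reasons = []
    if req.changes_bank_details and not req.verified_by_callback:
        reasons.append("bank detail change not confirmed by callback to number on file")
    if req.amount >= dual_approval_over and not req.second_approver:
        reasons.append("second approver required")
    return (not reasons, reasons)


allowed, why = may_release(WireRequest(60_000_000, True, False))
print(allowed, why)
```

Under these rules, a request like the one in the opening story would be held twice over, no matter how convincing the email thread looked.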

The Window for Preparation Is Closing

Your security training isn’t broken—it’s answering yesterday’s questions. While employees learn to spot typos in phishing emails, attackers deploy AI that writes more convincingly than your own executives. Training alone won’t close this gap.

The difference between businesses that survive AI-powered attacks and those that don’t comes down to one factor: whether their MSP recognized this threat early and rebuilt their security stack accordingly.

At WheelHouse IT, we made that decision in early 2024. We deployed AI-native email security, implemented phishing-resistant authentication, and redesigned our security awareness training before most MSPs acknowledged the problem existed. Our clients in healthcare, legal, and financial services aren’t wondering if they’re protected against AI-powered social engineering. They know they are, because they can see it in real time through Enverge.

Take Action Today

If your current IT provider hasn’t had this conversation with you yet, that tells you everything you need to know.

Schedule a 15-minute security assessment to see exactly where your current defenses leave gaps against AI-powered attacks. We’ll show you what threats are targeting your industry specifically, what modern protection actually requires, and whether your current MSP is equipped to deliver it.

No pressure. No sales pitch. Just transparency about the threat landscape in 2025 and whether you’re protected against it.

[Schedule Your Security Assessment]

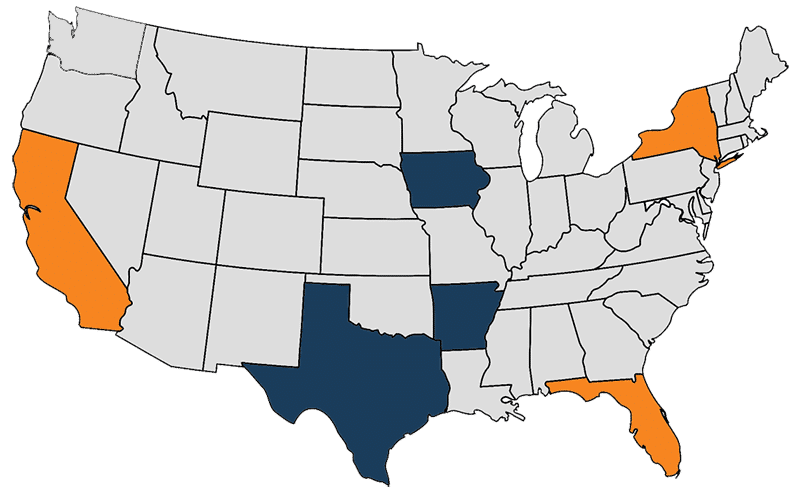

WheelHouse IT protects healthcare practices, law firms, financial services firms, and professional services companies across South Florida and New York. We don’t wait for threats to become standard before protecting our clients against them.