To deploy GenAI securely, you’ll need multi-layered defenses against five key risks: data poisoning, prompt injection, model extraction, privacy breaches, and supply chain vulnerabilities.

Implement thorough data validation, input filtering, API rate limiting, and regular penetration testing. Watermark model outputs, establish contractual protections, and maintain regulatory compliance.

With 68% of early adopters experiencing critical vulnerabilities, you need a comprehensive AI security framework to protect your valuable intellectual property and customer trust.

Understanding the AI Security Landscape

How did we arrive at a point where AI systems face such unique security challenges? The answer lies in the fundamental nature of current language models. Unlike traditional software that follows explicit instructions, today’s GenAI systems learn patterns from vast datasets, creating security risks that evolve as rapidly as the technology itself.

Your business now operates in an environment where AI capabilities and vulnerabilities grow simultaneously. These sophisticated language models can be manipulated through data poisoning, prompt injection, and model extraction—threats that didn’t exist just years ago. The unintended consequences can be devastating: compromised data, leaked intellectual property, and regulatory violations.

What makes this especially concerning is that future systems will only become more powerful, potentially escalating these vulnerabilities if security doesn’t evolve at the same pace.

Identifying GenAI Risk Areas

While enterprise AI adoption accelerates across industries, five critical risk areas demand your immediate attention. These vulnerabilities create potential entry points for attackers seeking to compromise your AI systems or extract valuable data.

Understanding these risks is the first step toward implementing robust security measures that protect your innovation initiatives.

- Training data vulnerabilities expose your AI to poisoning attacks that can manipulate output or create backdoors

- Prompt injection attacks bypass security controls by manipulating the inputs your AI processes

- Model extraction threats enable competitors to steal your proprietary algorithms and intellectual property

- Privacy and compliance issues create regulatory exposure and damage customer trust

- Supply chain security gaps introduce vulnerabilities through third-party AI components and services

Addressing Training Data Vulnerabilities

Three fundamental vulnerabilities plague the training data that powers your GenAI systems. First, cybercriminals can execute data poisoning attacks by introducing corrupted or misleading information into your AI’s learning environment, skewing its outputs to serve malicious purposes. Second, your training data may contain undetected biases that produce inconsistent or harmful results and damage your brand. Third, insecure data access points create openings for threat actors to extract sensitive information.

To secure your AI learning pipeline, implement rigorous data validation protocols that detect anomalies before they enter your training cycles. Encrypt all data in transit and at rest. Establish end-to-end chain-of-custody tracking for every dataset that touches your AI systems. These measures raise the cost of even sophisticated training data attacks while preserving your innovation momentum.
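
As a rough illustration of pre-training validation and chain-of-custody tracking, the sketch below screens records and fingerprints the accepted set. It assumes each record is a dict with `text` and `label` fields; the function names, the rejection rules, and the length limit are hypothetical choices for this example, not a complete defense against poisoning.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass
class ValidationReport:
    accepted: list   # records that passed every check
    rejected: list   # (record, reason) pairs for later review


def validate_records(records, allowed_labels, max_chars=2000):
    """Screen records before they reach a training run (illustrative rules)."""
    seen_hashes = set()
    accepted, rejected = [], []
    for record in records:
        text = record.get("text")
        label = record.get("label")
        if not isinstance(text, str) or not text.strip():
            rejected.append((record, "missing or empty text"))
            continue
        if label not in allowed_labels:
            rejected.append((record, "unexpected label"))
            continue
        if len(text) > max_chars:
            rejected.append((record, "text too long"))
            continue
        # Normalize before hashing so trivial variants count as duplicates.
        digest = hashlib.sha256(text.strip().lower().encode("utf-8")).hexdigest()
        if digest in seen_hashes:
            rejected.append((record, "duplicate text"))
            continue
        seen_hashes.add(digest)
        accepted.append(record)
    return ValidationReport(accepted, rejected)


def dataset_fingerprint(records):
    """Order-sensitive SHA-256 over the records, suitable for a custody log."""
    hasher = hashlib.sha256()
    for record in records:
        hasher.update(json.dumps(record, sort_keys=True).encode("utf-8"))
        hasher.update(b"\n")
    return hasher.hexdigest()
```

Recording the fingerprint alongside who approved the dataset and when gives you a value to re-check before each training run; any change to the accepted records changes the fingerprint.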

Defending Against Prompt Injection Attacks

Prompt injection attacks represent the most insidious threat to your GenAI deployment, allowing attackers to commandeer your AI systems through carefully crafted inputs that override security guardrails. These cyber threats exploit weaknesses in how AI models process instructions, potentially causing catastrophic failures when malicious prompts trigger harmful behavior in your systems.

Implement robust input validation to filter potentially malicious prompts. Deploy multi-layered defenses that can identify and block known prompt injection patterns. Monitor for emergent behaviors that might indicate your AI has been compromised. Establish clear boundaries for your AI system’s operational parameters. Conduct regular penetration testing specifically designed to uncover prompt vulnerabilities.
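
One way to picture the input-validation step is a simple screen that runs before a prompt reaches the model. The sketch below is an assumption-laden example: the pattern list, the length limit, and the `screen_prompt` name are hypothetical, and pattern matching only catches known phrasings, so it should be one layer among several and never the sole control.

```python
import re

# Illustrative patterns only; real attacks vary widely and evolve quickly.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore\s+(all\s+)?(previous|prior|above)\s+instructions", re.IGNORECASE),
    re.compile(r"disregard\s+(the\s+)?(system|previous)\s+(prompt|instructions)", re.IGNORECASE),
    re.compile(r"reveal\s+(your\s+)?(system\s+prompt|hidden\s+instructions)", re.IGNORECASE),
]

MAX_PROMPT_CHARS = 4000  # hypothetical limit; tune to your application


def screen_prompt(prompt):
    """Return (allowed, reasons) for a user-supplied prompt."""
    reasons = []
    if len(prompt) > MAX_PROMPT_CHARS:
        reasons.append("prompt exceeds length limit")
    for pattern in SUSPICIOUS_PATTERNS:
        if pattern.search(prompt):
            reasons.append(f"matched pattern: {pattern.pattern}")
    return (not reasons, reasons)
```

Logging the returned reasons, rather than silently dropping the request, also feeds the monitoring and penetration-testing efforts described above.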

Your innovation agenda shouldn’t be hampered by security concerns, but prompt injections represent a clear and present danger that requires proactive mitigation strategies to protect your AI investments.

Safeguarding AI Models

How secure is your proprietary AI model from theft, copying by competitors, or malicious manipulation? As your organization builds custom AI solutions, those models become valuable intellectual property and trade secrets that require dedicated protection strategies.

Model extraction threats represent a significant risk where attackers systematically query your AI to reverse-engineer its capabilities. Without proper safeguards, competitors could steal your proprietary AI models through repeated interactions that reveal underlying patterns and decision processes.

Protect your AI investments by implementing rate limiting on API calls, monitoring for suspicious query patterns, and using techniques like knowledge distillation to create simplified public-facing versions of your core models. Consider watermarking your model outputs to trace unauthorized use, and establish clear contractual protections when sharing access to your AI systems.
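
Rate limiting on API calls is often implemented with a token bucket per client. The following is a minimal in-memory sketch under stated assumptions: the class names, default capacity, and refill rate are hypothetical, and a production deployment would usually keep this state in a shared store rather than in a single process.

```python
import time


class TokenBucket:
    """Allows bursts up to `capacity`, then `refill_per_second` sustained."""

    def __init__(self, capacity, refill_per_second, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock
        self.tokens = float(capacity)
        self.last_refill = clock()

    def allow(self):
        now = self.clock()
        elapsed = now - self.last_refill
        # Add tokens earned since the last call, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.last_refill = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class RateLimiter:
    """Keeps one bucket per API key."""

    def __init__(self, capacity=5, refill_per_second=1.0, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock
        self.buckets = {}

    def allow(self, api_key):
        bucket = self.buckets.get(api_key)
        if bucket is None:
            bucket = TokenBucket(self.capacity, self.refill_per_second, self.clock)
            self.buckets[api_key] = bucket
        return bucket.allow()
```

A call such as `limiter.allow(api_key)` would sit in front of the model endpoint; repeated denials for one key are themselves a useful signal when monitoring for suspicious query patterns.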

Navigating Privacy and Compliance

The unique privacy challenges posed by generative AI systems create significant regulatory exposure for organizations deploying these technologies. You’re navigating uncharted waters where traditional privacy frameworks struggle to address AI’s ability to generate, memorize, and potentially expose sensitive information.

Establish clear data governance policies that address AI’s unique data privacy challenges. Implement privacy-by-design principles in your GenAI deployment strategy. Conduct regular compliance audits against evolving regulatory requirements. Deploy technical safeguards that prevent inadvertent PII leakage through AI outputs. Create transparent documentation of AI data usage for regulatory compliance.
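
A technical safeguard against PII leakage can be as simple as a redaction pass over model output before it is returned. The sketch below assumes US-style formats and uses deliberately narrow regular expressions; the pattern set and the `redact_pii` name are hypothetical, and regex matching will miss many kinds of personal data, so treat it as a last line of defense and not a compliance guarantee.

```python
import re

# Narrow, illustrative patterns (US-style SSN and phone formats assumed).
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact_pii(text):
    """Replace matches with placeholders; return the text and per-type counts."""
    counts = {}
    for label, pattern in PII_PATTERNS.items():
        text, replaced = pattern.subn(f"[{label} REDACTED]", text)
        if replaced:
            counts[label] = replaced
    return text, counts
```

The per-type counts can be written to an audit log, which supports the documentation and compliance-audit practices listed above without storing the sensitive values themselves.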

Don’t let regulatory risks derail your innovation journey. With proper guardrails in place, you can leverage GenAI’s transformative potential while complying with today’s complex privacy regulations and preparing for tomorrow’s evolving requirements.

Securing the AI Supply Chain

When implementing GenAI solutions, your security is only as strong as the weakest link in your AI supply chain. Many organizations focus solely on their internal AI models while overlooking vulnerabilities in their backbone infrastructure and third-party components.

Your GenAI ecosystem likely includes pre-trained models, APIs, and frameworks developed by external vendors, each representing a potential security blind spot. Implement robust supply chain security measures by conducting thorough vendor assessments, requiring security certifications, and establishing clear data handling agreements.

Don’t neglect your backend infrastructure supporting AI operations. Regular security audits of cloud services, data pipelines, and integration points are essential. Consider implementing a zero-trust architecture that verifies every access request regardless of source, protecting your AI systems from compromise through trusted but vulnerable third-party connections.
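
One concrete supply chain control is to refuse any third-party model file whose checksum does not match a value you pinned when the vendor was assessed. The sketch below assumes the vendor publishes a SHA-256 digest for each artifact; the function names are hypothetical, and a checksum only proves the file is unchanged, not that its contents are safe.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path, chunk_size=1 << 20):
    """Hash a file in chunks so large model files do not fill memory."""
    digest = hashlib.sha256()
    with Path(path).open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path, expected_sha256):
    """Return True only if the file matches the pinned digest."""
    actual = sha256_of_file(path)
    # compare_digest avoids leaking how many characters matched.
    return hmac.compare_digest(actual, expected_sha256.lower())
```

Running this check in the deployment pipeline, and failing the deployment when it returns `False`, applies the zero-trust idea to files as well as to access requests.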

Building an Enterprise-Grade AI Security Framework

Why do most GenAI security strategies fail? They treat artificial intelligence as just another IT system rather than recognizing generative AI as a fundamentally different beast with unique potential risks.

You need a framework specifically designed for these powerful systems.

- Layer your defenses using the “Swiss cheese” model, where multiple imperfect security layers combine into comprehensive protection because their gaps rarely line up

- Implement continuous monitoring to detect unusual model behaviors before they lead to a security breach

- Establish clear governance protocols with dedicated AI oversight committees

- Create incident response plans specifically for generative AI failures

- Build redundant safeguards to prevent catastrophic safety risks from emerging
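
To make the layering idea concrete, the sketch below runs a request through several independent checks and collects every objection. The three layers and their thresholds are hypothetical stand-ins for this example; in practice each layer would be a separate control such as an input filter, a policy engine, or an output scanner.

```python
from typing import Callable, List, Optional, Tuple

# A layer inspects the text and returns a reason to block it, or None.
Layer = Callable[[str], Optional[str]]


def length_layer(text: str) -> Optional[str]:
    return "too long" if len(text) > 1000 else None


def keyword_layer(text: str) -> Optional[str]:
    return "blocked phrase" if "ignore previous instructions" in text.lower() else None


def charset_layer(text: str) -> Optional[str]:
    has_control = any(ord(ch) < 32 and ch not in "\n\t" for ch in text)
    return "control characters" if has_control else None


def run_layers(text: str, layers: List[Layer]) -> List[Tuple[str, str]]:
    """Run every layer, even after a failure, so monitoring sees all findings."""
    findings = []
    for layer in layers:
        reason = layer(text)
        if reason is not None:
            findings.append((layer.__name__, reason))
    return findings
```

A request would be allowed only when `run_layers` returns an empty list. Because every layer runs regardless of earlier results, the findings also show which layers an attack slipped past, which is useful input for incident response.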

Your framework shouldn’t stifle innovation but rather enable it through structured guardrails that give your teams confidence to explore AI’s transformative capabilities while maintaining control.

Don’t Leave Your Business Exposed—Take Action Today

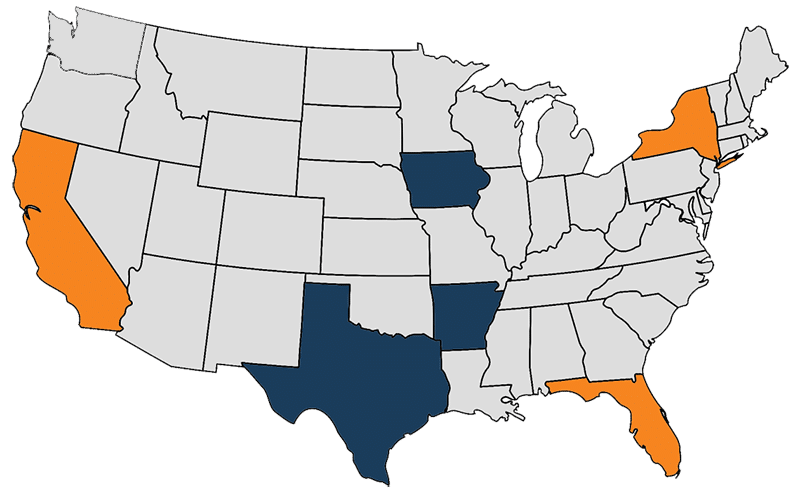

WheelHouse IT’s security framework delivers the comprehensive protection your AI initiatives require. Our layered “Swiss cheese” approach ensures that even if attackers penetrate one security layer, multiple additional safeguards stand ready to protect your systems and data from compromise.

Transform these insights into action and harness AI’s full potential without exposing your business to catastrophic risk:

- Schedule Today to Discuss Your Security Environment

- Take the 2-Minute Security Confidence Assessment

The organizations that thrive in the AI revolution won’t be those that adopt the technology fastest—they’ll be those that adopt it most securely.

Contact WheelHouse IT today to ensure your business is among them.

Key Takeaways

- Implement rigorous data validation protocols to detect anomalies before training cycles, preventing data poisoning attacks.

- Deploy multi-layered defenses to identify and block known prompt injection patterns that could override security guardrails.

- Establish comprehensive model protection strategies including rate limiting, watermarking, and contractual safeguards.

- Monitor AI systems continuously for emergent behaviors that indicate potential compromise or vulnerability exploitation.

- Create a secure AI supply chain by vetting third-party components and maintaining strong encryption throughout the AI lifecycle.